“The network is the computer,” a phrase coined by John Gage of Sun Microsystems back in 1984, proved incredibly insightful. This idea is re-emerging, this time within the system-on-chip (SoC) realm. Functions in a chip now communicate with each other not through simple wires but through complex network elements such as switches, protocol converters, and packetizers. They are not so different from the set of computers communicating through a network within a cabinet, or a room, back in 1984.

Before SoCs, to connect from A to B on a board, engineers could move data through a bunch of wires. The biggest worry was about managing wire length and ensuring that A and B use the exact same protocol for communication, but that was about it. The real action was in the compute elements. Wiring between these precious components was then a simple design task.

From wires to active bus logic

As SoC capabilities grew, it became possible to consolidate whole boards and even bigger systems onto a single chip, all governed by a central processing unit (CPU) or cluster of processors. Each CPU ran software to orchestrate the system and to handle functions that need the flexibility software provides. Solutions from companies like Arm, which provided processors, grew rapidly. Other suppliers quickly followed, including intellectual property (IP) providers for functions other than CPUs. At first they offered peripheral IPs to handle many interface protocols, and then they moved on to specialized processors for wireless communication, graphics processing, audio, computer vision, and artificial intelligence (AI). Adding to this list are on-chip working memories, cache memories, double data rate (DDR) interfaces to off-chip or off-die DRAM, and more.

There are lots of great functions ready to be integrated into advanced SoCs, but how would they communicate? Not through direct connections, because the whole chip would be covered in wires, and CPUs and memories would slow to a crawl figuring out what to service next. Instead, all that traffic must be routed through highways with metered on-ramps. If an IP wants to talk to the CPU, or vice versa, it must wait its turn to get onto the highway.

The bus was no longer just dumb wires. There was logic to monitor what gets on and decide what to allow next, plus queuing to let data flow between domains operating at different speeds. Pipeline registers helped span large distances while meeting timing constraints. Many integration teams called this a “bus fabric,” weaving connections through control logic, muxing, registers, and first-in, first-out (FIFO) queues.
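To make the metered on-ramp idea concrete, here is a minimal software sketch of a shared bus with one FIFO per initiator and a round-robin arbiter. It is a toy model only: the class name `RoundRobinBus`, the initiator names, and the round-robin policy itself are assumptions chosen for illustration, and real bus fabrics implement arbitration in hardware with many other policies.

```python
from collections import deque


class RoundRobinBus:
    """Toy model: one shared bus, one FIFO per initiator, round-robin grants."""

    def __init__(self, initiators):
        self.order = list(initiators)
        # Each initiator queues its requests until it is granted the bus.
        self.queues = {name: deque() for name in self.order}
        # Index of the initiator that is checked first on the next cycle.
        self.next_index = 0

    def request(self, initiator, payload):
        """Queue a request on the initiator's on-ramp."""
        self.queues[initiator].append(payload)

    def cycle(self):
        """Grant the bus to at most one initiator; return (name, payload) or None."""
        count = len(self.order)
        for offset in range(count):
            index = (self.next_index + offset) % count
            name = self.order[index]
            if self.queues[name]:
                # The winner moves to the back of the line for the next cycle.
                self.next_index = (index + 1) % count
                return name, self.queues[name].popleft()
        return None  # nobody was waiting


bus = RoundRobinBus(["cpu", "gpu", "dma"])
bus.request("cpu", "read A")
bus.request("cpu", "read B")
bus.request("dma", "write C")
grants = [bus.cycle() for _ in range(4)]
print(grants)
```

Although the CPU queued two requests first, the DMA request is served between them, which is the "wait its turn" behavior: no single initiator can hold the highway while others are waiting.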

Bus architectures evolve

Now that there was an architecture for the bus fabric, it became possible to imagine different architectures for different purposes. The Advanced Microcontroller Bus Architecture (AMBA) family alone had multiple flavors, each with its own advantages and constraints, and a complex SoC likely needs several of them. But there was another technology, the network-on-chip (NoC), which is quite different in concept: it doesn’t tightly couple interconnect communications and physical transport, opening up new architectural options. I’ll talk more about the relative advantages of these options in my next blog.
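One way to picture that decoupling is a sketch in which a transaction is packetized at the edge of the network and carried over links of different widths without the transaction itself changing. Everything here is hypothetical and simplified: `Transaction`, `packetize`, `depacketize`, and the header fields are illustrative names, not the format of any particular NoC product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    """What the IP block wants to communicate (hypothetical fields)."""
    source: int
    dest: int
    address: int
    data: bytes


def packetize(txn, link_bytes):
    """Split a transaction into a header plus payload chunks sized to the link."""
    header = {
        "source": txn.source,
        "dest": txn.dest,
        "address": txn.address,
        "length": len(txn.data),
    }
    payload = [txn.data[i:i + link_bytes] for i in range(0, len(txn.data), link_bytes)]
    return header, payload


def depacketize(header, payload):
    """Rebuild the original transaction at the destination."""
    data = b"".join(payload)
    assert len(data) == header["length"]
    return Transaction(header["source"], header["dest"], header["address"], data)


txn = Transaction(source=0, dest=3, address=0x8000_0000, data=bytes(range(16)))
chunk_counts = {}
for link_bytes in (4, 8):  # two different physical link widths, in bytes
    header, payload = packetize(txn, link_bytes)
    chunk_counts[link_bytes] = len(payload)
    # The transaction arrives unchanged regardless of the link width.
    assert depacketize(header, payload) == txn
print(chunk_counts)
```

The same 16-byte transaction travels as four chunks on the narrow link and two on the wide one, so the physical transport can be resized without touching what the endpoints send.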

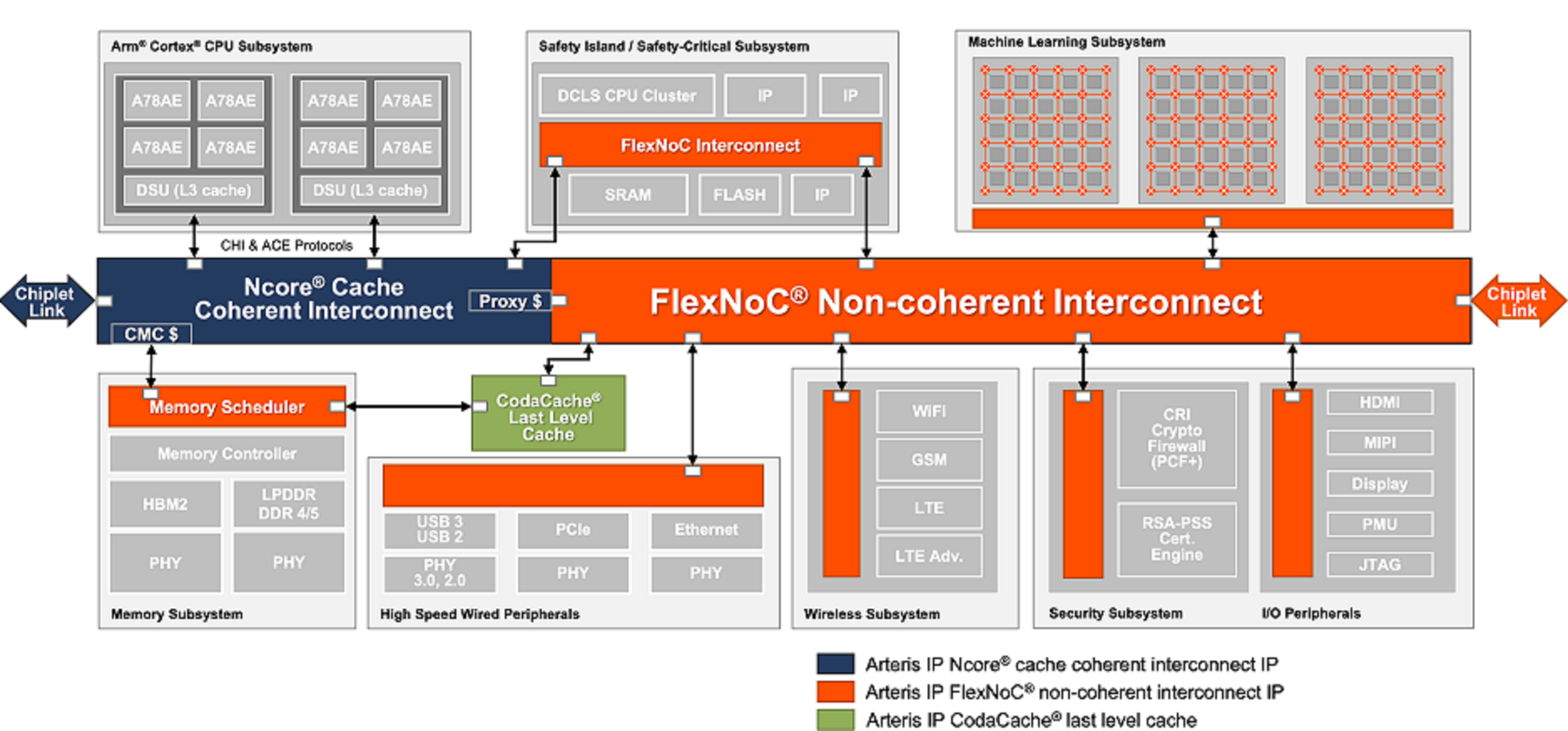

The NoC interconnect is the SoC architecture. Source: Arteris IP

There is another important consideration. A typical SoC is now built around many third-party IPs plus some of the integrator’s own IP with custom advantages. But how large is that advantage? Competitors can buy the same third-party products, diluting possible differentiation. The challenge then becomes how effectively design teams can integrate their SoCs.

The good news is that there is room to do precisely that. Bandwidth, throughput, quality of service (QoS), power, safety, and cost are all determined by integration. These factors are influenced by the implemented communication architecture, most likely an NoC.