In today's rapidly evolving technological landscape, hardware accelerators have become essential components for applications requiring intensive computing power. Among these technologies, Field-Programmable Gate Arrays (FPGAs) and Graphics Processing Units (GPUs) stand out as leading solutions for high-performance computing (HPC) and artificial intelligence (AI) applications. But which one should you choose for your specific workload? This comprehensive comparison will guide you through the critical differences between FPGAs and GPUs, helping you make informed decisions about your hardware acceleration needs.

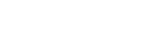

Overview comparison of FPGA and GPU architectures (Source: Logic Fruit Technologies)

Today, we'll explore the fundamental architectural differences between FPGAs and GPUs, their performance characteristics, energy efficiency profiles, and ideal application scenarios. Whether you're developing AI algorithms, designing embedded systems, or optimizing high-performance computing workloads, understanding these differences is crucial for selecting the right technology for your project.

Architecture: Understanding the Core Differences

What is an FPGA?

A Field-Programmable Gate Array (FPGA) is an integrated circuit whose programmable hardware fabric can be configured after manufacturing to implement almost any digital circuit. Unlike traditional processors with fixed architectures, FPGAs can be reprogrammed at the hardware level to implement custom logic and functionality for specific tasks.

Core FPGA Components:

- Configurable Logic Blocks (CLBs)

- Memory Blocks

- Interconnect Matrix (Routing Resources)

- Input/Output Blocks

- Digital Signal Processing (DSP) Units

FPGAs feature a sea of configurable logic blocks connected through a programmable interconnect network. This flexibility allows designers to implement custom hardware accelerators tailored to specific algorithms or workloads, enabling hardware-level parallelism that can be reconfigured as needs change.
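At the heart of a typical configurable logic block is a small look-up table (LUT). A k-input LUT stores a 2^k-entry truth table, so loading different configuration bits changes the logic function without touching the silicon. The Python sketch below models a single LUT to show that idea; the class and helper names and the 4-input size are illustrative assumptions, and real CLBs also contain flip-flops, carry chains, and multiplexers that are not modeled here.

```python
class LUT:
    """Model of a k-input look-up table: its configuration is a truth table."""

    def __init__(self, k, truth_table):
        # A k-input LUT needs exactly 2**k configuration bits.
        if len(truth_table) != 2 ** k:
            raise ValueError(f"expected {2 ** k} bits, got {len(truth_table)}")
        self.k = k
        self.bits = list(truth_table)

    def evaluate(self, *inputs):
        # The input bits form an address into the truth table (inputs[0] is the LSB).
        if len(inputs) != self.k:
            raise ValueError(f"expected {self.k} inputs")
        address = sum(bit << i for i, bit in enumerate(inputs))
        return self.bits[address]


def truth_table_from(k, func):
    # "Programming" the LUT: precompute the output for every input combination.
    return [func(*[(addr >> i) & 1 for i in range(k)]) for addr in range(2 ** k)]


# The same hardware model, configured two different ways.
xor4 = LUT(4, truth_table_from(4, lambda a, b, c, d: a ^ b ^ c ^ d))
and4 = LUT(4, truth_table_from(4, lambda a, b, c, d: a & b & c & d))
print(xor4.evaluate(1, 0, 1, 1))  # 1
print(and4.evaluate(1, 1, 1, 1))  # 1
```

Reconfiguring the FPGA amounts to rewriting thousands of these tables (plus the routing between them), which is why the same chip can become a different accelerator after a new bitstream is loaded.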

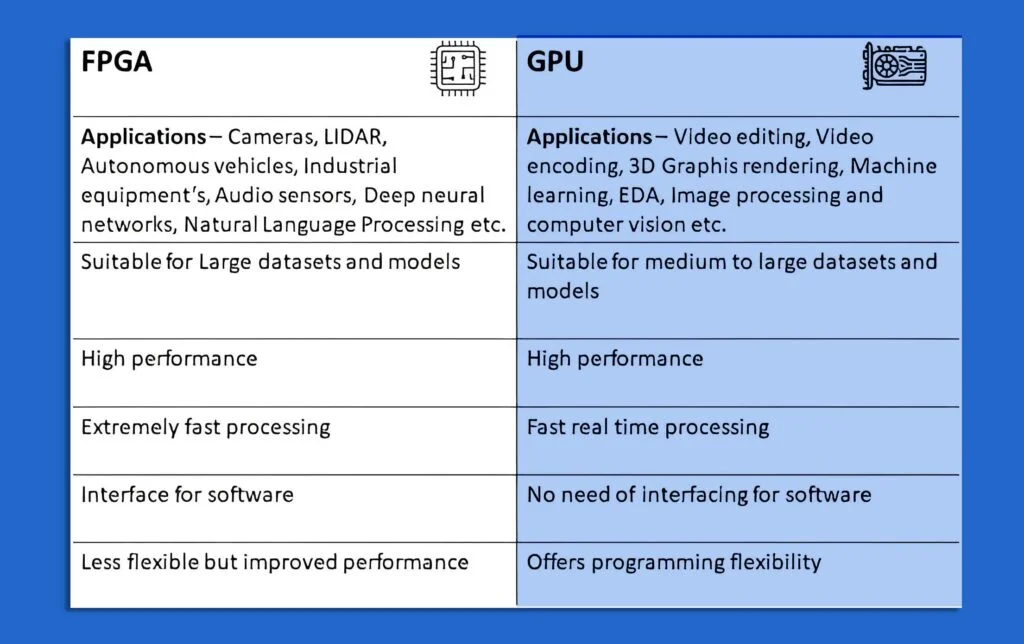

What is a GPU?

A Graphics Processing Unit (GPU) was originally designed to render video and graphics but has evolved into a powerful parallel computing engine. Modern GPUs contain thousands of small, efficient cores that run many threads in parallel, making them ideal for workloads with high data parallelism.

Core GPU Components:

- Streaming Multiprocessors (SMs)

- Global Memory (GDDR/DDR)

- L2 Cache

- Memory Controller

- Interconnect Network

- PCIe Interface (connects to CPU)

GPUs excel at performing the same operation across large datasets in parallel. Their architecture is optimized for throughput rather than latency, which makes them particularly effective for workloads like deep learning training, where massive matrix operations are common.
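In GPU programming models such as CUDA, this pattern is written as a kernel: one small function that every thread runs on a different element, selected by its thread index. The Python sketch below only imitates that model sequentially to show its shape; `saxpy_kernel`, `launch`, and the block size of 256 are illustrative assumptions, and a real GPU would run the threads in parallel across its streaming multiprocessors.

```python
def saxpy_kernel(thread_idx, a, x, y, out):
    # Each "thread" computes exactly one element: out[i] = a * x[i] + y[i].
    i = thread_idx
    if i < len(x):  # guard: the last block may have more threads than elements
        out[i] = a * x[i] + y[i]


def launch(kernel, n, block_size, *args):
    # Round the grid up so every element gets a thread, as CUDA code typically does.
    num_blocks = (n + block_size - 1) // block_size
    for block in range(num_blocks):
        for thread in range(block_size):
            kernel(block * block_size + thread, *args)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(x)
launch(saxpy_kernel, len(x), 256, 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0, 60.0]
```

Because every thread executes the same instructions on independent data, the hardware can schedule them in large groups, which is exactly the "same operation across large datasets" case GPUs are built for.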

Comparison between CPU and GPU architectures (Source: Intel)

Key Architectural Differences

| Feature | FPGA | GPU |
|---|---|---|
| Architecture Type | Configurable logic blocks, customizable | Fixed array of programmable cores optimized for parallel processing |
| Flexibility | Highly customizable at hardware level | Programmable in software, but the hardware datapath is fixed |
| Programming Model | Hardware Description Languages (HDLs), High-Level Synthesis (HLS) | CUDA, OpenCL, DirectCompute; frameworks such as TensorFlow and PyTorch |
| Latency | Low (customized pipelines) | Moderate (kernel launch, batching, and memory-transfer overhead) |
| Throughput | Moderate | High |
| Memory Architecture | Distributed on-chip memory blocks, customizable memory hierarchy | Fixed memory hierarchy (caches, shared memory) backed by high-bandwidth GDDR or HBM memory |

Performance Comparison: Speed, Latency, and Throughput

When comparing FPGAs and GPUs, performance characteristics vary significantly depending on the workload. Let's break down these differences across various performance metrics:

Raw Computing Power

GPUs typically outperform FPGAs in raw throughput for operations like floating-point calculations. For instance, modern high-end GPUs can deliver over 100 TFLOPS (trillion floating-point operations per second) of peak single-precision performance, whereas high-end FPGAs might reach around 10 TFLOPS. However, peak figures don't tell the whole story, as FPGAs can be optimized for specific data types and algorithms.
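Peak figures like these are usually calculated rather than measured: number of parallel arithmetic units × clock frequency × floating-point operations per unit per cycle, where a fused multiply-add (FMA) counts as two operations. The sketch below applies that formula; the unit counts and clock rates are hypothetical round numbers chosen for illustration, not the specifications of any particular product.

```python
def peak_tflops(units, clock_ghz, flops_per_unit_per_cycle=2):
    """Theoretical peak in TFLOPS; the default of 2 assumes one FMA per unit per cycle."""
    return units * clock_ghz * 1e9 * flops_per_unit_per_cycle / 1e12


# Hypothetical GPU: 16,384 FP32 lanes at 2.0 GHz, one FMA each per cycle.
gpu = peak_tflops(16_384, 2.0)
# Hypothetical FPGA: 5,000 DSP blocks at 0.75 GHz, one FP32 FMA each per cycle.
fpga = peak_tflops(5_000, 0.75)
print(f"GPU  peak: {gpu:.1f} TFLOPS")   # GPU  peak: 65.5 TFLOPS
print(f"FPGA peak: {fpga:.1f} TFLOPS")  # FPGA peak: 7.5 TFLOPS
```

These are upper bounds: sustained throughput on real workloads is usually lower, limited by memory bandwidth and how well the algorithm keeps every unit busy, which is one reason measured results vary so much by algorithm.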

"We find that for 6 out of the 15 ported kernels, today's FPGAs can provide comparable performance or even achieve better performance than the GPU, while for the remaining 9 kernels the GPU still delivers better performance."

- Study from Simon Fraser University

Latency and Real-Time Processing

FPGAs excel in low-latency applications where deterministic response times are crucial. Their ability to create customized hardware pipelines means they can process data with minimal delay, making them ideal for:

- High-frequency trading systems

- Real-time signal processing

- Network packet processing

- Time-sensitive control systems

GPUs, while powerful, typically introduce higher latency due to their memory architecture and programming model. However, they compensate with exceptional throughput, processing massive amounts of data simultaneously.
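The deterministic latency of an FPGA pipeline follows from simple arithmetic: a fully pipelined datapath has a fixed number of stages, so at a fixed clock frequency its latency is stages ÷ clock frequency, and once the pipeline is full it can accept a new input every cycle. The sketch below works through that arithmetic, assuming the pipeline never stalls; the 40-stage depth and 300 MHz clock are hypothetical values, not figures from any study cited here.

```python
def pipeline_latency_ns(stages, clock_mhz):
    # Each stage takes one clock period; a result emerges after `stages` cycles.
    period_ns = 1_000.0 / clock_mhz
    return stages * period_ns


def pipeline_throughput_mps(clock_mhz, inputs_per_cycle=1):
    # Once the pipeline is full, it accepts `inputs_per_cycle` new inputs every cycle.
    return clock_mhz * inputs_per_cycle  # millions of inputs per second


# Hypothetical design: 40 pipeline stages clocked at 300 MHz.
print(round(pipeline_latency_ns(40, 300), 2))  # 133.33 (ns), the same for every input
print(pipeline_throughput_mps(300))            # 300 (million inputs per second)
```

Latency and throughput are decoupled here: adding stages raises latency slightly but often allows a higher clock, while throughput stays at one result per cycle.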

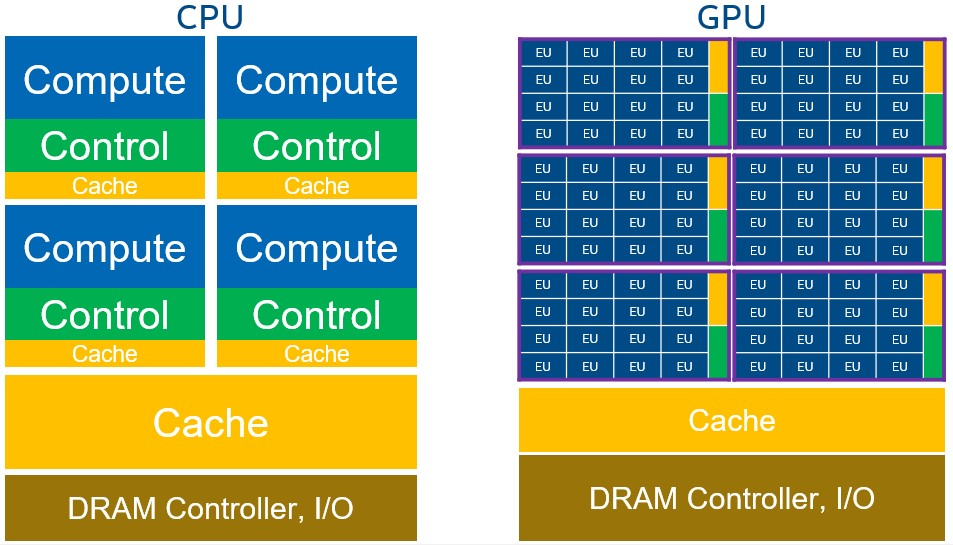

Benchmarking Results

Performance comparison of FPGA vs GPU by algorithm type (Source: Haltian)

As shown in the benchmarking results above, performance varies significantly based on the specific algorithm. FPGAs outperform GPUs in several categories including:

- Pattern matching and regular expressions

- Random number generation

- Sparse matrix operations

- Certain cryptographic functions

Meanwhile, GPUs demonstrate superior performance in:

- Dense matrix multiplication

- Convolutional neural networks

- Scientific simulations

- Image and video processing at scale
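Pattern matching, listed above among the FPGA strengths, suits FPGAs because a matcher can be built as a finite-state machine that consumes one input symbol per clock cycle with a fixed amount of work per symbol, whatever the data looks like. The Python sketch below builds such a state machine for a single literal pattern (precomputing transitions with the Knuth-Morris-Pratt construction) and scans a byte stream one symbol at a time. It illustrates the streaming idea only; the function names are hypothetical, and production FPGA regex engines are considerably more elaborate.

```python
def build_dfa(pattern):
    """Transition table dfa[state][byte] -> next state for a literal byte pattern."""
    if not pattern:
        raise ValueError("pattern must be non-empty")
    m = len(pattern)
    dfa = [[0] * 256 for _ in range(m + 1)]  # states 0..m; state m means "match"
    dfa[0][pattern[0]] = 1
    restart = 0  # state reached by the input seen so far, minus its first byte
    for state in range(1, m + 1):
        dfa[state] = list(dfa[restart])  # on mismatch, behave like the restart state
        if state < m:
            dfa[state][pattern[state]] = state + 1  # on match, advance
            restart = dfa[restart][pattern[state]]
    return dfa


def stream_match(dfa, stream):
    accept = len(dfa) - 1
    state, hits = 0, []
    for pos, byte in enumerate(stream):
        state = dfa[state][byte]  # one table lookup per byte, like one clock cycle
        if state == accept:
            hits.append(pos - accept + 1)  # offset where the match started
    return hits


print(stream_match(build_dfa(b"aba"), b"abababa"))  # [0, 2, 4]
```

Because each step is a single table lookup with no backtracking, the hardware version processes data at a constant rate, and many such machines can run side by side on the same stream.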

Data Size Impact on Performance

An interesting finding from research is that the relative performance advantage between FPGAs and GPUs can shift depending on data set size. FPGAs often excel with smaller data sets, where their lower latency provides an advantage. As data sizes increase, GPUs' massive parallelism becomes more beneficial, allowing them to process large batches more efficiently.
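A first-order model makes this trend concrete: total time = fixed overhead + items ÷ throughput. A device with small overhead but modest throughput wins on small inputs, while one with large overhead (for example, host-to-device transfers and kernel launches) but high throughput wins once the input is large enough to amortize that overhead. The sketch below treats the FPGA as the low-overhead device and the GPU as the high-throughput one; all numbers are hypothetical, chosen only to show the shape of the trade-off.

```python
def total_time_us(items, overhead_us, items_per_us):
    # First-order model: fixed setup cost plus time proportional to the data size.
    return overhead_us + items / items_per_us


def crossover_items(overhead_a, rate_a, overhead_b, rate_b):
    # Solve overhead_a + n / rate_a == overhead_b + n / rate_b for n (needs rate_b > rate_a).
    return (overhead_b - overhead_a) / (1 / rate_a - 1 / rate_b)


# Hypothetical devices: low overhead / lower throughput vs. high overhead / higher throughput.
fpga = dict(overhead_us=2.0, items_per_us=100.0)
gpu = dict(overhead_us=50.0, items_per_us=1_000.0)

for n in (1_000, 10_000, 100_000):
    print(n, total_time_us(n, **fpga), total_time_us(n, **gpu))

n_star = crossover_items(2.0, 100.0, 50.0, 1_000.0)
print(round(n_star))  # 5333: below this size the low-overhead device finishes first
```

Real systems are messier (FPGA designs can also be memory-bound, and GPUs can overlap transfers with compute), but the model captures why batch size often decides which device comes out ahead.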

Energy Efficiency: Power Consumption Analysis

In an era of increasing concerns about energy consumption, power efficiency has become a critical consideration when selecting computing hardware for AI and HPC workloads. FPGAs and GPUs show marked differences in this area.

Power Consumption Profiles

Published comparisons frequently find FPGAs substantially more energy-efficient than GPUs for many computations, often reporting 3 to 10 times lower power consumption for equivalent workloads. This efficiency stems from their ability to implement custom circuits that use only the components a specific task needs.

"Another study shows that FPGA can be 15 times faster and 61 times more energy efficient than GPU for uniform random number generation."

Performance-per-Watt Comparison

When examining performance-per-watt metrics, FPGAs often demonstrate significant advantages:

| Task | FPGA Power (W) | GPU Power (W) | FPGA Advantage (assuming equal throughput) |
|---|---|---|---|
| Matrix Multiplication | 50 | 200 | 4x more efficient |
| Convolutional Neural Network | 30 | 150 | 5x more efficient |
| Scientific Simulation | 70 | 250 | 3.6x more efficient |
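The figures in the table above are power draws in watts, so the ratios equal energy-efficiency gains only if both devices take the same time to finish the task. Energy per task is power × runtime, and a faster device can offset a higher power draw. The sketch below reuses the matrix-multiplication power figures from the table with hypothetical runtimes to show how the advantage changes when one device finishes sooner.

```python
def energy_joules(power_w, runtime_s):
    # Energy is power integrated over time; constant power is assumed here.
    return power_w * runtime_s


def efficiency_ratio(power_a, runtime_a, power_b, runtime_b):
    # How many times less energy device A uses than device B for the same task.
    return energy_joules(power_b, runtime_b) / energy_joules(power_a, runtime_a)


# Power figures from the matrix-multiplication row; the runtimes are hypothetical.
FPGA_W, GPU_W = 50, 200
print(efficiency_ratio(FPGA_W, 1.0, GPU_W, 1.0))  # 4.0: equal runtimes, the table's case
print(efficiency_ratio(FPGA_W, 2.0, GPU_W, 1.0))  # 2.0: GPU finishes 2x faster
print(efficiency_ratio(FPGA_W, 4.0, GPU_W, 1.0))  # 1.0: GPU 4x faster, equal energy
```

When comparing devices, measuring energy per completed task (or operations per joule) avoids this pitfall, because it accounts for both power draw and speed.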

Energy Efficiency in Different Deployment Scenarios

The energy efficiency advantage of FPGAs makes them particularly attractive for:

- Edge Computing: Where power constraints are significant and battery life is critical

- Data Centers: To reduce operational costs and carbon footprint

- Embedded Systems: Where thermal management is challenging

- IoT Devices: Where energy consumption must be minimized

GPUs, while less energy-efficient on a per-operation basis, may still be more cost-effective for certain high-throughput workloads where their raw performance outweighs power considerations. Modern GPU architectures have also seen significant improvements in power efficiency through specialized cores for AI workloads.

AI and High-Performance Computing Applications

Both FPGAs and GPUs play crucial roles in accelerating AI workloads and high-performance computing applications, but their strengths make them suitable for different scenarios.

FPGA Advantages for AI

FPGAs offer several key advantages for specific AI applications:

FPGA AI Strengths:

- Low-latency inference: Critical for real-time applications like autonomous vehicles and industrial automation

- Custom precision: Ability to implement custom bit-width operations optimized for specific neural networks

- Adaptability: Can be reconfigured as AI models evolve without hardware replacement

- Sensor fusion: Excellent at handling multiple input streams from different sensors
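Custom precision means the datapath can be built for exactly the number of bits a model needs, for example an 8-bit or 6-bit fixed-point format instead of 32-bit floating point, so each multiplier consumes fewer logic and DSP resources. The sketch below quantizes values to a signed fixed-point format with a configurable width; the function name and the chosen widths are illustrative assumptions, and real quantization flows usually also calibrate a scale factor per layer or per channel.

```python
def quantize(value, total_bits, frac_bits):
    """Round to signed fixed-point with `total_bits` bits, `frac_bits` of them fractional."""
    scale = 1 << frac_bits
    lo = -(1 << (total_bits - 1))     # most negative representable integer code
    hi = (1 << (total_bits - 1)) - 1  # most positive representable integer code
    # Round to nearest (Python's round() sends ties to even), then saturate.
    code = max(lo, min(hi, round(value * scale)))
    return code / scale


weights = [0.731, -0.25, 0.0625, -1.9]
for bits in (8, 6, 4):
    # Keep 2 integer bits (including sign) and use the rest for the fraction.
    q = [quantize(w, bits, bits - 2) for w in weights]
    err = max(abs(a - b) for a, b in zip(weights, q))
    print(bits, q, round(err, 4))
```

Fewer bits shrink each multiplier but increase rounding error. On an FPGA the width can be tuned per layer to the smallest value the model tolerates, whereas a GPU runs most efficiently with the formats its arithmetic units natively support.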

GPU Advantages for AI

GPUs have become the dominant platform for many AI workloads due to:

GPU AI Strengths:

- Massive parallelism: Ideal for training large neural networks with millions to billions of parameters

- Mature ecosystem: Extensive library support through CUDA, TensorFlow, PyTorch, etc.

- High memory bandwidth: Essential for processing large training datasets

- Developer familiarity: Larger pool of developers familiar with GPU programming

The decision between FPGAs and GPUs for AI workloads often depends on whether you're prioritizing training or inference, as well as your specific performance requirements.

| AI Workload Type | Recommended Hardware | Reasoning |
|---|---|---|
| Model Training (Large Scale) | GPU | Superior throughput, ecosystem support, high memory bandwidth |
| Real-time Inference | FPGA | Low latency, energy efficiency, custom data path optimization |
| Edge AI Deployment | FPGA | Power efficiency, reconfigurability, custom I/O interfaces |
| Batch Inference | GPU | High throughput for processing multiple inputs simultaneously |

High-Performance Computing Applications

Beyond AI, both technologies serve critical roles in other HPC applications:

FPGA HPC Applications:

- Financial modeling and high-frequency trading

- Real-time signal processing

- Genomic sequencing

- Network packet processing and security

- Hardware acceleration for specialized scientific algorithms

GPU HPC Applications:

- Computational fluid dynamics

- Molecular dynamics simulations

- Weather forecasting models

- Seismic analysis

- Large-scale visualization and rendering

Strengths and Weaknesses Analysis

FPGA Strengths

- Flexibility and reconfigurability: Can be reprogrammed for different applications without changing hardware

- Low latency: Custom hardware pipelines enable deterministic, real-time processing

- Energy efficiency: Optimized circuits consume significantly less power than general-purpose processors

- Custom I/O interfaces: Direct connection to various hardware interfaces without intermediate layers

- Long product lifecycle: Ideal for industrial and defense applications with decades-long support requirements

FPGA Weaknesses

- Development complexity: Requires specialized hardware design skills and longer development cycles

- Lower peak floating-point performance: Generally less raw compute power compared to GPUs

- Smaller developer community: Fewer resources, libraries, and expert developers available

- Higher initial development costs: Development tools and expertise can be expensive

- Limited memory bandwidth: Generally cannot match GPUs for high-throughput memory operations

GPU Strengths

- Massive parallelism: Thousands of cores for high-throughput computing

- High memory bandwidth: Optimized for moving large amounts of data quickly

- Mature software ecosystem: Extensive libraries and frameworks (CUDA, TensorFlow, PyTorch)

- Developer accessibility: Easier programming model and larger talent pool

- Cost-effectiveness for certain workloads: Better performance-per-dollar for compatible applications

GPU Weaknesses

- Higher power consumption: Less energy-efficient than FPGAs for many workloads

- Fixed architecture: Limited ability to optimize for specific algorithms

- Higher latency: Memory transfers and programming model introduce overhead

- Dependency on host CPU: Typically operates as a co-processor

- Limited I/O flexibility: Restricted connectivity options compared to FPGAs

Top 3 Hottest Selling FPGAs

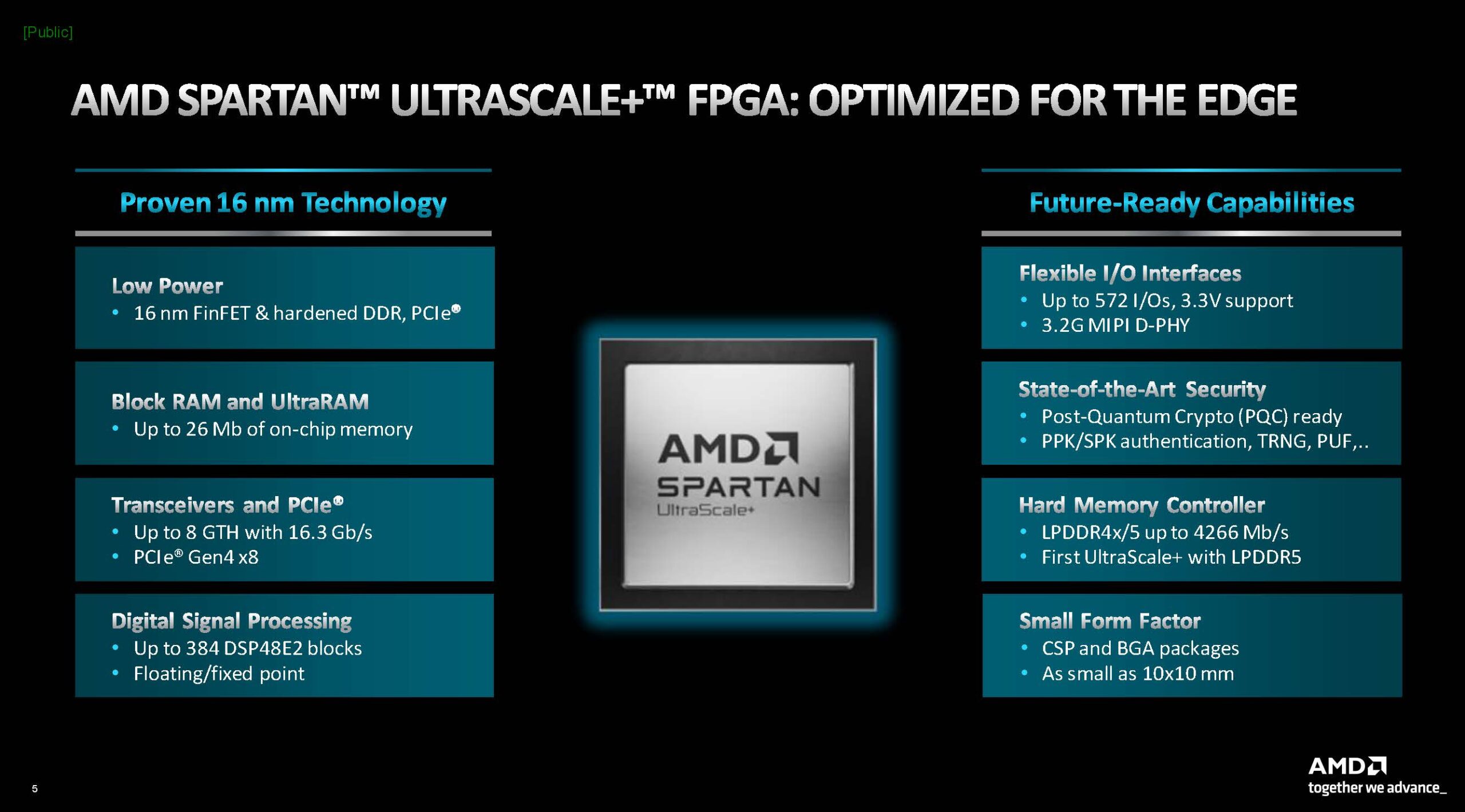

AMD Spartan™ UltraScale™ FPGA

Package: BGA-676

Logic Elements: 308,437

I/Os: 292

Operating Temp: 0°C to 100°C

Supply Voltage: 0.85V

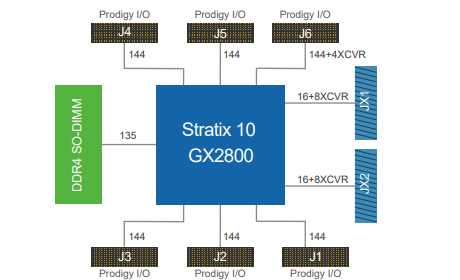

Intel® Stratix® 10 GX 2800 FPGA

Package: 2397-BBGA, FCBGA

Logic Elements: 2,800,000

I/Os: 704

Operating Temp: 0°C to 100°C

Supply Voltage: 0.77V to 0.97V

Lattice ECP5-5G FPGA

Package: 381-FBGA

Logic Elements: 84,000

I/Os: 205

Operating Temp: 0°C to 85°C

Supply Voltage: 1.045V to 1.155V

Common Misconceptions About FPGAs and GPUs

Misconception 1: FPGAs are always slower than GPUs

While GPUs often deliver higher raw throughput, FPGAs can outperform GPUs in specific workloads, particularly those requiring low latency or custom data paths. The performance comparison is highly dependent on the specific algorithm and implementation.

Misconception 2: FPGAs are too difficult to program

While FPGAs traditionally required expertise in Hardware Description Languages (HDLs), modern High-Level Synthesis (HLS) tools have significantly lowered the barrier to entry. Frameworks like Intel's oneAPI and AMD's Vitis AI are making FPGA development more accessible to software developers.

Misconception 3: GPUs are only useful for graphics and gaming

While GPUs originated for graphics rendering, modern GPUs are powerful general-purpose computing devices. The GPGPU (General-Purpose computing on Graphics Processing Units) revolution has transformed them into essential tools for scientific computing, AI, data analytics, and more.

Misconception 4: You must choose between FPGA or GPU

Many modern systems benefit from a heterogeneous computing approach, combining CPUs, GPUs, and FPGAs. Each processor handles the workloads best suited to its architecture, creating a more efficient overall system.

Checklist for Choosing Between FPGA and GPU

Consider these factors when deciding between FPGA and GPU for your project:

- Latency requirements: If real-time processing with deterministic response times is critical, FPGAs often provide an advantage.

- Development timeline: GPUs typically enable faster development cycles, especially if your team lacks hardware design expertise.

- Power constraints: For energy-limited applications like edge devices or data centers optimizing for efficiency, FPGAs typically consume significantly less power.

- Ecosystem compatibility: Consider existing software stacks, libraries, and frameworks that your application relies on.

- Data parallelism: Highly parallel workloads with uniform operations on large datasets tend to favor GPUs.

- Custom interface requirements: If you need direct connectivity to specialized hardware or sensors, FPGAs offer more flexibility.

- Budget constraints: Consider both upfront hardware costs and development costs (including time and expertise).

- Reconfigurability needs: If your application may need to adapt to changing requirements in the field, FPGAs offer runtime reprogrammability.
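One way to make this checklist explicit is to turn it into a weighted score, so the team agrees on priorities before debating hardware. The sketch below is a hypothetical rubric, not an established method: the criteria names mirror the checklist, while the weights and the direction each criterion leans (+1 toward FPGA, -1 toward GPU) are assumptions you would replace with your own project's values.

```python
# Hypothetical importance weights for one project (0 = irrelevant, 3 = critical).
weights = {
    "latency": 3,
    "development_timeline": 1,
    "power": 3,
    "ecosystem": 1,
    "data_parallelism": 1,
    "custom_io": 2,
    "budget": 1,
    "reconfigurability": 1,
}

# Assumed direction of each criterion: +1 leans FPGA, -1 leans GPU.
# "budget" is assumed to lean GPU because FPGA development costs tend to be higher.
leaning = {
    "latency": +1,
    "development_timeline": -1,
    "power": +1,
    "ecosystem": -1,
    "data_parallelism": -1,
    "custom_io": +1,
    "budget": -1,
    "reconfigurability": +1,
}


def score(weights, leaning):
    # Weighted sum: positive suggests FPGA, negative suggests GPU, zero is a tie.
    return sum(weight * leaning[name] for name, weight in weights.items())


total = score(weights, leaning)
verdict = "FPGA" if total > 0 else "GPU" if total < 0 else "prototype both"
print(total, verdict)  # 5 FPGA
```

A score close to zero is a useful signal in itself: it suggests prototyping the critical kernel on both platforms before committing.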

Real User Experience Stories

"We initially deployed our machine learning inference pipeline on GPUs, but after switching critical real-time components to FPGAs, we reduced system latency by 70% while cutting our power consumption in half. The development time was longer, but for our autonomous vehicle application, the performance gains were worth it."

- Senior Engineer at an Autonomous Vehicle Company

"Our research lab works on both FPGAs and GPUs for different AI projects. We've found that for training large models, nothing beats the raw throughput and ecosystem support of GPUs. However, for deploying efficient inference systems, especially at the edge, our FPGA implementations consistently outperform in terms of latency and power efficiency."

- AI Research Director at a Major University

"In our high-frequency trading operation, we moved from GPUs to FPGAs and saw a 90% reduction in processing latency. In our business, even microseconds matter, so this was a game-changer. The development cost was higher, but the competitive advantage justified the investment."

- CTO of a Financial Technology Firm

Frequently Asked Questions about FPGAs vs. GPUs

Are FPGAs or GPUs better for deep learning?

FPGAs can excel at deep learning inference, particularly for low-latency or power-constrained applications. However, for training large neural networks, GPUs still generally offer superior performance due to their higher computational throughput and mature software ecosystem. Many organizations use GPUs for training and FPGAs for deployment in specific scenarios.

How do FPGA and GPU development costs compare?

FPGA development typically requires more specialized skills and longer development cycles, resulting in higher initial development costs. GPU development leverages more mainstream programming models and a larger talent pool, often resulting in faster time-to-market. However, for specific applications, the performance or power-efficiency benefits of FPGAs may offset the higher development investment over time.

Can an FPGA be programmed to work like a GPU?

Yes, it's technically possible to implement GPU-like functionality on an FPGA, but this approach isn't typically practical for general graphics processing. FPGAs can be programmed to perform parallel computations similar to GPUs, but they won't match the performance of dedicated GPUs for graphics rendering. However, FPGAs can be excellent for specialized accelerators that target specific computational patterns.

How do the FPGA and GPU software ecosystems compare?

GPUs benefit from robust software ecosystems, including CUDA, OpenCL, and numerous deep learning frameworks like TensorFlow and PyTorch, which have built-in GPU support. FPGA development has traditionally been more complex, requiring hardware description languages. However, high-level synthesis tools and frameworks like Intel's oneAPI and AMD's Vitis are improving the FPGA development experience, though the ecosystem remains less mature than for GPUs.

Are FPGAs or GPUs better for edge AI?

For many edge AI applications, FPGAs offer advantages in terms of power efficiency, real-time processing capabilities, and customizable I/O interfaces. This makes them well-suited for deployment in constrained environments like IoT devices or autonomous systems. However, some modern edge-focused GPUs are specifically designed for these constraints and may be easier to develop for. The optimal choice depends on specific application requirements, power constraints, and development resources.

Conclusion: Choosing the Right Hardware Accelerator

The choice between FPGAs and GPUs isn't a matter of which technology is universally superior, but rather which is better suited to your specific application requirements. Here's a summary of the key considerations:

Consider FPGAs when:

- Low latency and deterministic timing are critical

- Power efficiency is a primary concern

- You need custom I/O interfaces or direct hardware connectivity

- Your application requires hardware-level customization

- Long-term deployment with potential field updates is expected

Consider GPUs when:

- Maximum computational throughput is required

- You're working with large-scale deep learning models

- Rapid development time is essential

- You need to leverage established software frameworks

- Your workload involves massive parallelism with regular patterns

In many cutting-edge systems, the optimal approach involves a heterogeneous computing architecture that combines CPUs, GPUs, and FPGAs, allowing each type of processor to handle the workloads best suited to its strengths.

As technology continues to evolve, we're seeing the boundaries between these technologies blur, with FPGA manufacturers improving programmability and GPU manufacturers increasing customization options. Understanding the fundamental differences outlined in this article will help you make informed hardware acceleration decisions regardless of how the technology landscape develops.

Have you implemented a project using FPGAs or GPUs? What factors influenced your decision? Share your experiences in the comments below!

What Do You Think?

We'd love to hear your thoughts:

- Have you used both FPGAs and GPUs in your projects? Which did you prefer and why?

- What specific applications do you think are best suited for FPGAs versus GPUs?

- Do you think the distinction between FPGAs and GPUs will blur as technology evolves?

- What challenges have you faced when working with either FPGAs or GPUs?